Research

Selected works from my research on agentic system design, reasoning reliability, and scalable learning.

System Level — Agentic System Architecture and Scalable Multi-Agent Autonomy

-

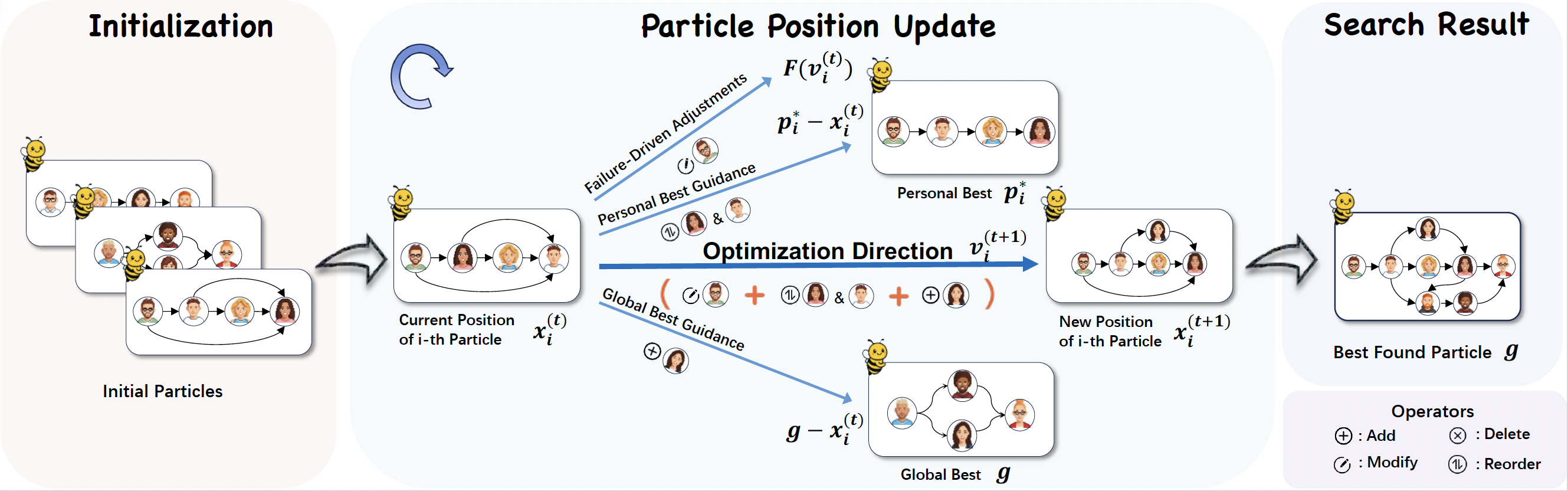

EMNLP 2025 (Main)

SwarmAgentic is a framework for fully automated agentic system generation. It constructs agentic systems from scratch and jointly optimizes agent functionality and collaboration as interdependent components through language-driven exploration. It reformulates particle swarm optimization as interpretable text-symbol updates over agent roles and coordination structures, enabling efficient exploration of the agentic system design space.

Tags: Scalable Autonomy, Automated Agentic System Generation, Swarm Intelligence
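For readers unfamiliar with the algorithm that SwarmAgentic reformulates, the following is a minimal sketch of classical, numeric particle swarm optimization. It is not SwarmAgentic's text-symbol update; it only illustrates the velocity-and-position rule (inertia plus pulls toward the personal and global best) that the framework recasts in language. The function names, the search bounds, and the coefficient values `w`, `c1`, `c2` are illustrative assumptions.

```python
import random


def pso_minimize(f, dim, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Classical numeric PSO over [-5, 5]^dim; returns (best_position, best_value)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(-5, 5) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # best position seen by each particle
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # best position seen by the swarm

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward personal best + pull toward global best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Toy objective with its minimum at the origin."""
    return sum(v * v for v in x)


best, value = pso_minimize(sphere, dim=2)
```

In the numeric setting a particle is a vector of floats; in the description above, the analogous object is a candidate agentic system described in text.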

-

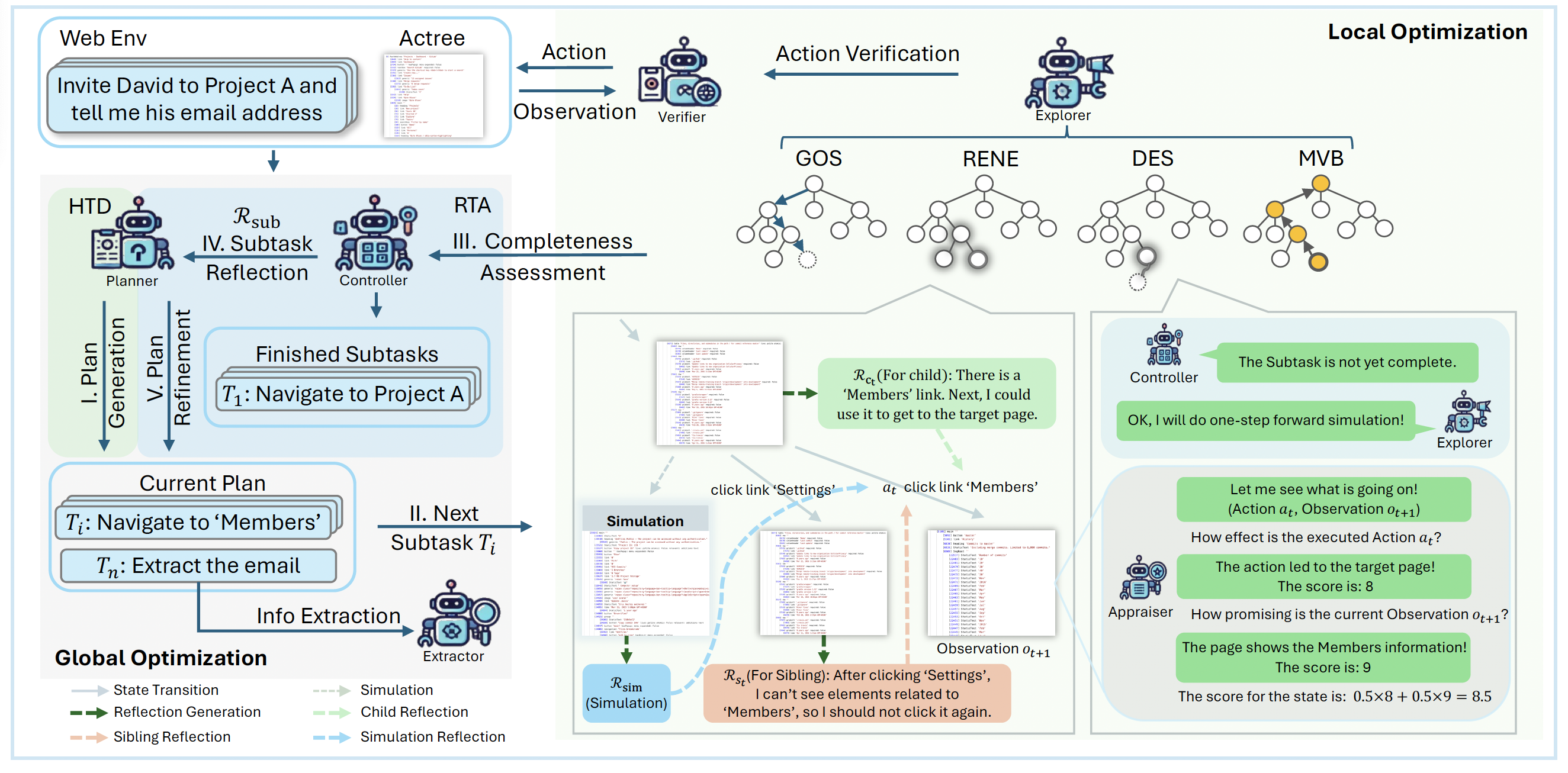

AAAI 2025

WebPilot is a multi-agent system with a dual optimization strategy that improves Monte Carlo Tree Search (MCTS) to better handle complex web environments. It uses Global Optimization for high-level planning and Local Optimization for executing subtasks, achieving state-of-the-art performance on WebArena with a 93% relative increase in success rate.

Tags: Web Agents, Monte Carlo Tree Search, Multi-Agent Systems, Reflection-Based Optimization
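As background on the search procedure mentioned above, here is a minimal sketch of the standard UCT selection rule used in vanilla MCTS. It is the textbook baseline, not WebPilot's modified strategy. The action names and the `(name, total_value, visits)` tuple layout are hypothetical choices made for illustration.

```python
import math


def uct_score(total_value, visits, parent_visits, c=math.sqrt(2)):
    """Mean value (exploitation) plus a visit-count bonus (exploration)."""
    if visits == 0:
        return math.inf  # always try unvisited children first
    return total_value / visits + c * math.sqrt(math.log(parent_visits) / visits)


def select_child(children):
    """children: list of (name, total_value, visits); returns the chosen name."""
    parent_visits = sum(visits for _, _, visits in children)
    best = max(children, key=lambda ch: uct_score(ch[1], ch[2], parent_visits))
    return best[0]


# Hypothetical web actions with accumulated value and visit counts.
choice = select_child([("click", 3.0, 5), ("type", 1.0, 1), ("scroll", 0.0, 0)])
```

The exploration constant `c` trades off revisiting high-value actions against trying rarely visited ones; `sqrt(2)` is the conventional default for rewards in [0, 1].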


Reasoning Level — Process-Level Reasoning and Policy Alignment for Reliable Decision-Making

-

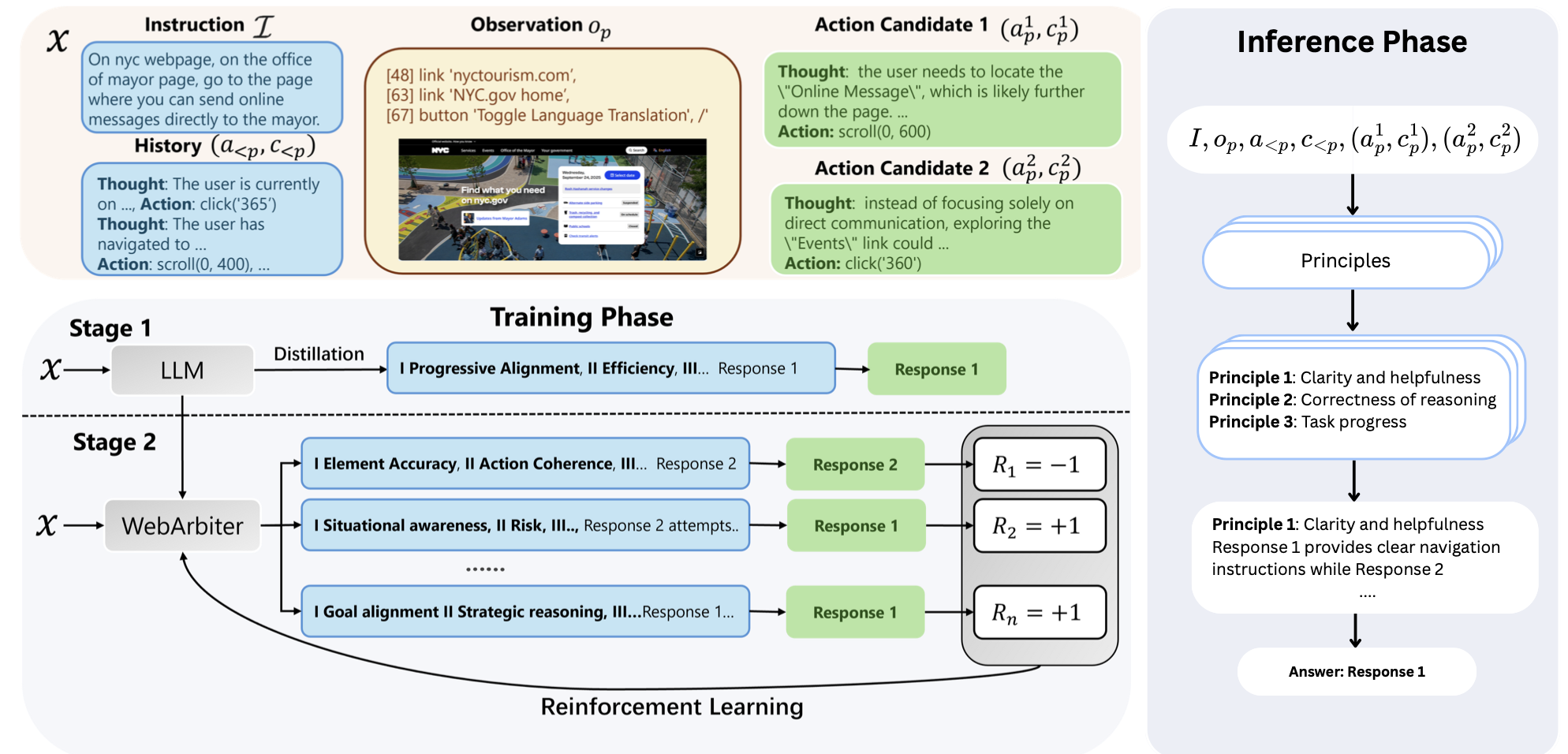

ICLR 2026

WebArbiter is a reasoning-first, principle-inducing WebPRM (a process reward model for web agents) that frames process reward modeling as structured text generation. It produces auditable justifications that conclude with a preference verdict, selecting the action that best advances task completion. It is trained via reasoning distillation and verifiable-reward RL for robust, inference-time-scalable decision-making on long-horizon web tasks.

Tags: Process Reward Modeling, Reasoning-Based Reward Modeling, Criterion-Induced Evaluation, Web Agents

-

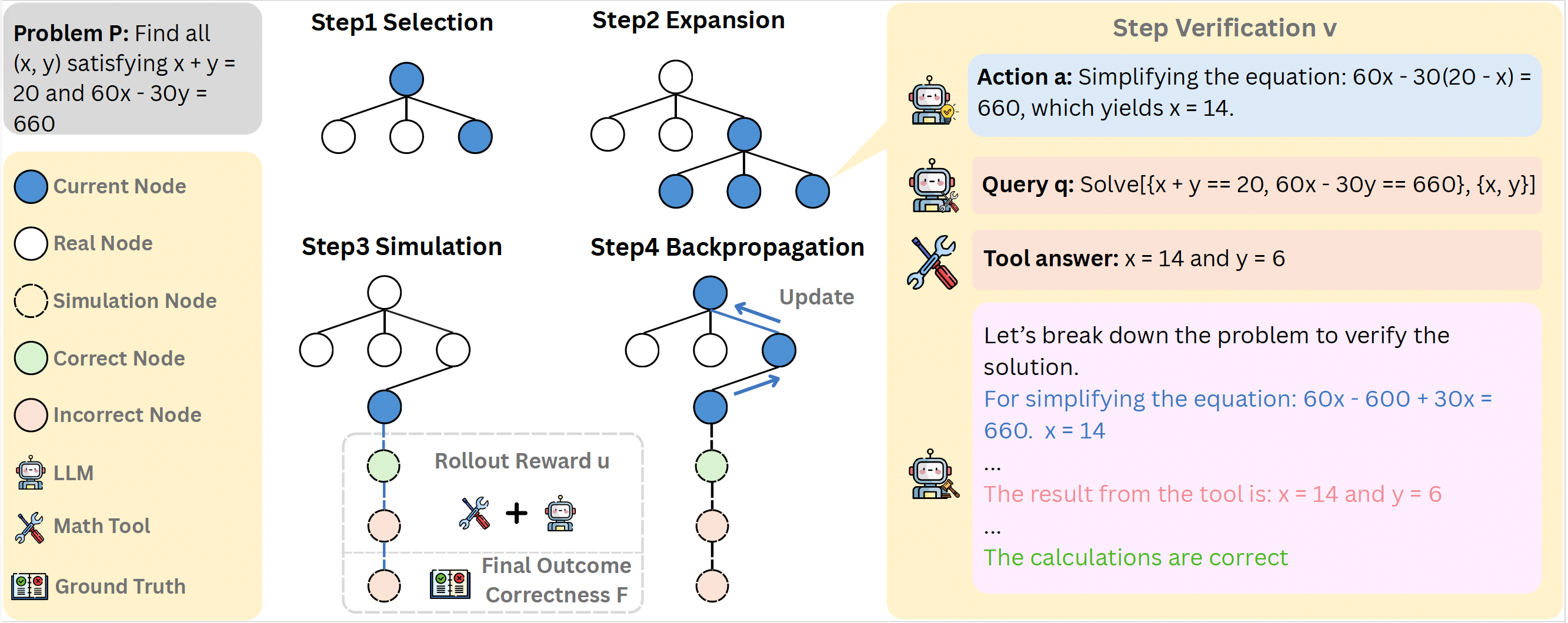

NeurIPS 2025 Workshop LAW

GroundedPRM is a tree-guided, fidelity-aware framework for automatic process reward modeling that combines MCTS-guided path construction with tool-based step verification. It achieves state-of-the-art performance using only 10% of the training data used by existing auto-labeled methods, demonstrating strong sample efficiency and reasoning quality.

Tags: Process Reward Modeling, Multi-Step Reasoning, Monte Carlo Tree Search, Tool Verification


Learning Level — Adaptive and Federated Learning for Scalable Multimodal Intelligence

-

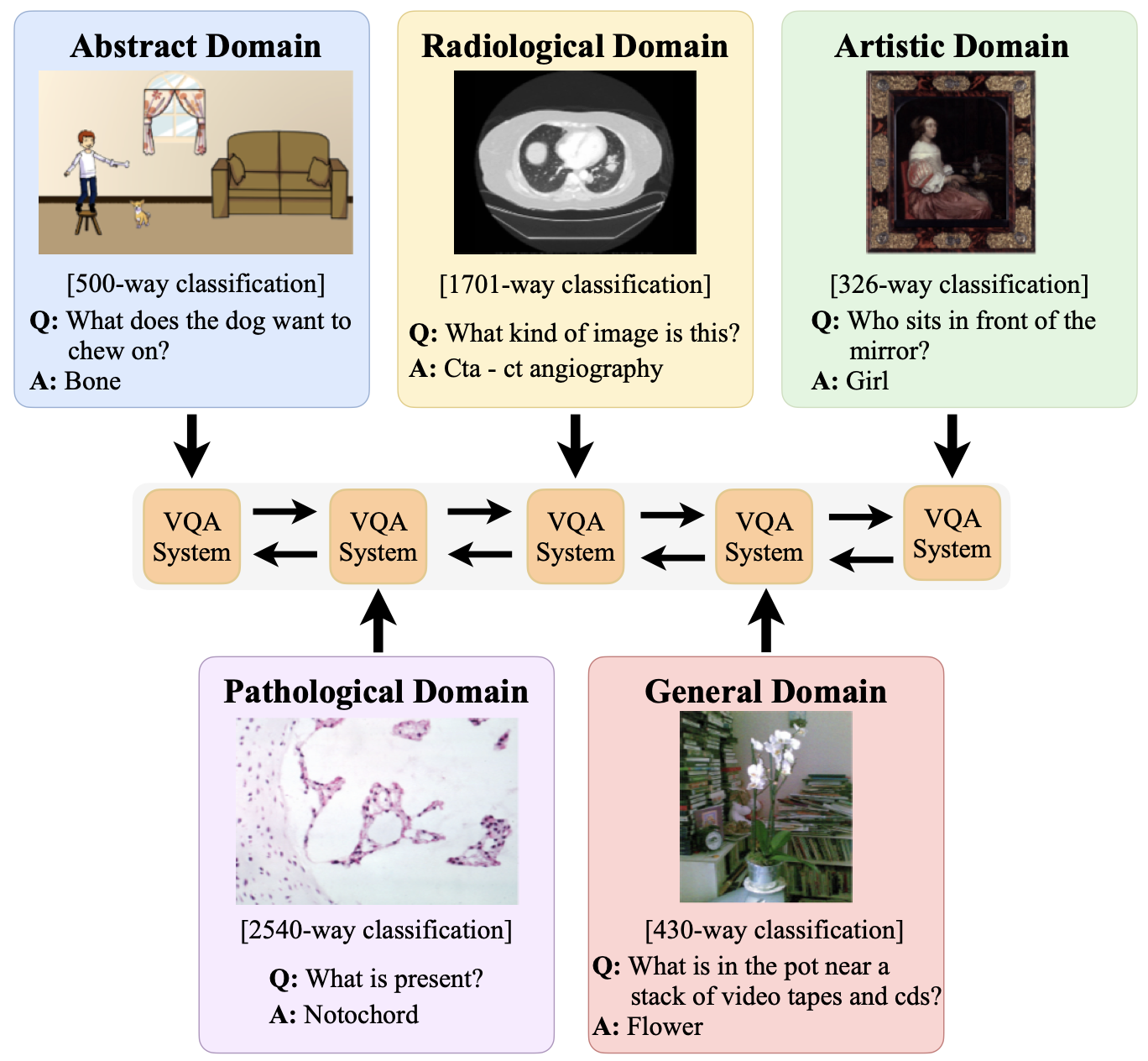

CL-CrossVQA: A Continual Learning Benchmark for Cross-Domain Visual Question Answering

WACV 2025

CL-CrossVQA is a benchmark for continual learning in cross-domain visual question answering. It evaluates how well vision-language models retain previously learned knowledge while adapting to new visual domains and question types, and it highlights key challenges in representation retention and cross-domain generalization.

Tags: Continual Learning, Multimodal Reasoning, Cross-Domain Robustness, Generalization

-

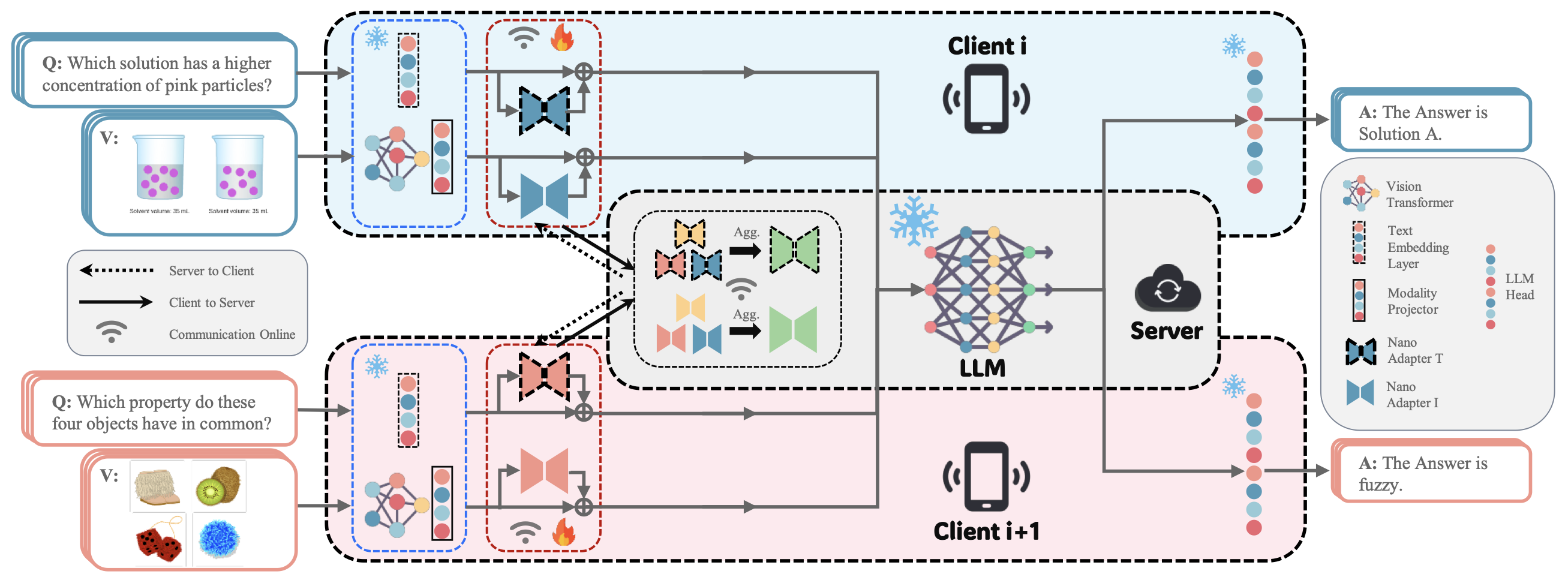

FedNano: Toward Lightweight Federated Tuning for Pretrained Multimodal Large Language Models

Under Review, 2025

FedNano is a lightweight federated tuning framework for pretrained multimodal large language models. It drastically reduces client-side computational cost while maintaining strong reasoning and adaptation performance, enabling scalable and privacy-preserving federated fine-tuning with minimal overhead on client devices.

Tags: Federated Learning, Multimodal Adaptation, Efficient Fine-Tuning, Scalable Intelligence

-

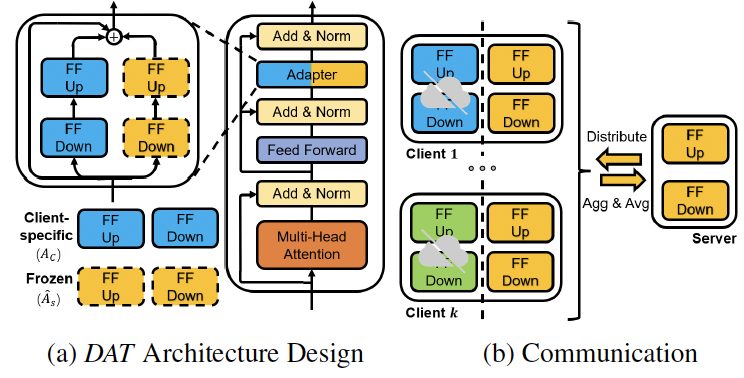

FedDAT: An Approach for Foundation Model Finetuning in Multi-Modal Heterogeneous Federated Learning

AAAI 2024

FedDAT is an approach for fine-tuning foundation models in multi-modal heterogeneous federated learning. It handles heterogeneity in both data modalities and client data distributions, enabling efficient, scalable, and privacy-preserving adaptation of large foundation models.

Tags: Heterogeneous Federated Learning, Multimodal Finetuning, Foundation Model Adaptation
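For context on the federated setting in this section, below is a minimal sketch of FedAvg-style aggregation, the standard baseline in which a server averages client parameters weighted by local dataset size. It is not the FedDAT or FedNano method. The parameter name `adapter` and the `(num_examples, params)` update layout are hypothetical.

```python
def fedavg(client_updates):
    """Weighted average of client parameters.

    client_updates: list of (num_examples, {param_name: [floats]}).
    All clients are assumed to share the same parameter names and shapes.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("at least one client must report training examples")
    reference = client_updates[0][1]
    return {
        name: [
            # each client's value counts in proportion to its local data size
            sum(n * params[name][i] for n, params in client_updates) / total
            for i in range(len(values))
        ]
        for name, values in reference.items()
    }


# Two hypothetical clients holding 1 and 3 examples respectively.
merged = fedavg([
    (1, {"adapter": [0.0, 2.0]}),
    (3, {"adapter": [4.0, 2.0]}),
])
```

Plain averaging like this assumes client data are roughly similar in distribution; heterogeneous modalities and client distributions, the challenge named above, are where such a baseline tends to struggle.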
